Paper: Steven McCanne, Van Jacobson, Martin Vetterli, Receiver-driven Layered Multicast

Lecture date: 11/4/99

Prof. John Byers

Scribe: Igor Stubailo

Multicast routing has been widely studied and good solutions are known. Multicast transport is more interesting.

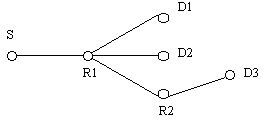

So, we have one or more sources that inject packets into the network, and multiple targets (receivers).

What problems can we experience?

1) ack implosion: the number of acks per packet equals the # of receivers; nack implosion: the number of nacks equals the # of receivers that haven't received a packet

2) duplicate packets - you don't want to retransmit packets to those receivers that have already got all the data

3) recovery latency - you wish to retransmit a lost packet efficiently

4) recovery isolation - you don't want to bother other receivers when an isolated packet is lost somewhere

5) adaptation to membership changes

Routers are doing more than just forwarding packets: to reach all receivers they may need to forward the same packet over multiple links (duplicating packets). Establishing routes is challenging.

Participants can leave and join. Examples: videoconferencing, MBone, broadcasting seminars.

How are joining and leaving the group handled?

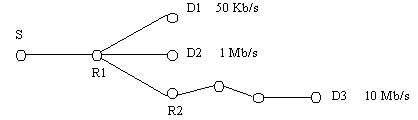

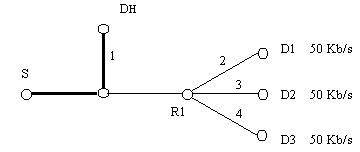

The router which receives a "leave" detaches, for example, D3. If nobody else below router R2 is interested in receiving packets, then R2 sends a message to R1 and is detached as well. In this case S doesn't care about members leaving and joining. So, leaving is easy, but routers must process the messages.
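The leave handling described above can be sketched in a few lines. This is only an illustration of the idea, not how any real router or protocol implements it: the `Router` class, its methods, and the receiver name D2 are hypothetical, while R1, R2 and D3 follow the example in the notes.

```python
class Router:
    """A router in a hypothetical multicast distribution tree."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent        # upstream router (None for the router next to S)
        self.downstream = set()     # attached receivers and child routers, by name

    def join(self, member):
        """Attach a member; if this router was detached, join upstream first."""
        was_empty = not self.downstream
        self.downstream.add(member)
        if was_empty and self.parent is not None:
            self.parent.join(self.name)

    def leave(self, member):
        """Detach a member; if nobody is left downstream, leave upstream too."""
        self.downstream.discard(member)
        if not self.downstream and self.parent is not None:
            self.parent.leave(self.name)


r1 = Router("R1")
r2 = Router("R2", parent=r1)
r2.join("D2")    # D2 is a made-up second receiver below R2
r2.join("D3")
r2.leave("D3")   # R2 still serves D2, so R1 keeps forwarding to R2
r2.leave("D2")   # nobody is left below R2, so R2 detaches from R1
```

Note that the source S never appears in the code: all state lives in the routers, which is the point made in the notes.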

Joining is similar. First you have to be aware of the address. Here we are talking about IP multicast: sessions have addresses, which refer to collections of hosts.

Once D3 finds out the multicast address, it sends a "join" message and is joined to the tree along the shortest path.

Now we are interested in videoconferencing over a multicast tree.

What are some of the obstacles? The goal here is the following: stream a video to a large number of clients.

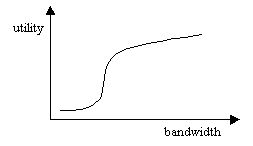

We have just a rough picture of the utility function U_i of each receiver i, and the goal is to maximize the total utility: Max U = Sum_i U_i(s_i).

Adaptive video codecs

/ \

/ \

unicast multicast

where unicast adapts on the fly to the "available" b/w. Multicast

is not so straightforward and heterogeneous

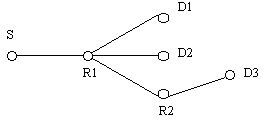

b/w's receive rates cause problems. Let's look at the following

topology:

If you want to transmit a video stream to everyone, you have to transmit it at the lowest rate, but that is unfair to the "high" users. Alternatively, you can transmit at a "middle" rate, but then some slow users cannot participate.

The solution here is layering, where everyone can receive data at its own rate.

Multiple layers (ML)

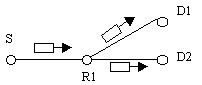

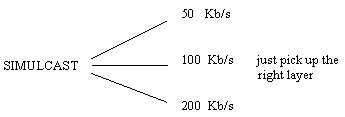

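In the context of ML, one approach is simulcast, i.e. instead of having one session you could have 3 disjoint sessions, each carrying the full stream at a different rate.

This approach is not widely used. The better one is cumulative, progressive improvement: each additional layer refines the quality of the layers below it.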

For example:

the base layer is 50 Kb/s

the second layer is 50 Kb/s

the last layer is 100 Kb/s

So, if you subscribe to all layers you are getting 200 Kb/s, while in simulcast the same three quality levels demand 50 + 100 + 200 = 350 Kb/s.
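The arithmetic above can be checked with a short sketch. The variable names are made up for illustration; the layer rates are the ones from the example.

```python
from itertools import accumulate

# Per-layer rates in Kb/s: base layer, second layer, last layer.
layer_rates = [50, 50, 100]

# Cumulative layering: subscribing to the first k layers gives the sum of their rates.
quality_levels = list(accumulate(layer_rates))

# With layering, everything sent adds up to the top quality level.
layered_total = sum(layer_rates)

# With simulcast, each quality level is sent as its own independent stream.
simulcast_total = sum(quality_levels)

print(quality_levels)    # [50, 100, 200]
print(layered_total)     # 200
print(simulcast_total)   # 350
```

So on a link that carries all three quality levels, layering needs 200 Kb/s where simulcast needs 350 Kb/s.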

Receiver-driven Layered Multicast (RLM)

Prerequisite: video codecs

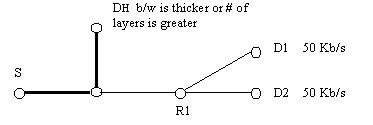

Given the codecs, you have multiple multicast groups (one for each layer). Receivers can adjust to different rates. The sender chooses the rates of the layers; the choice depends on the application and the receiver population. For those layers we are going to use geometric rates, like 1, 2, 4, 8, 16, ...

So, if your b/w is 12 you can use only 8, the largest rate in the sequence that does not exceed 12.
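A minimal sketch of that choice, assuming the sequence 1, 2, 4, 8, 16 lists the rates a receiver can end up with. The function name `best_rate` is made up for illustration.

```python
def best_rate(bandwidth, rates):
    """Return the largest offered rate that fits in the bandwidth, or None."""
    fitting = [r for r in rates if r <= bandwidth]
    return max(fitting) if fitting else None


rates = [1, 2, 4, 8, 16]
print(best_rate(12, rates))   # 8
print(best_rate(0.5, rates))  # None: not even the lowest rate fits
```

With geometric rates a receiver always uses more than half of its b/w, as long as the lowest rate fits and the top rate is not the limit.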

The sender doesn't know who the destinations are. It only chooses the rates for the layers. The adaptive entities are the receivers: they make decisions based on what they observe.

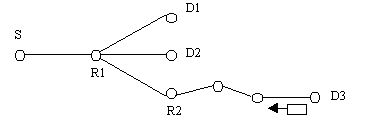

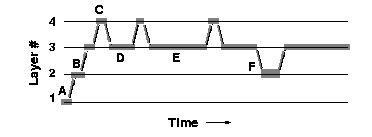

Now suppose a new user comes in: it subscribes to the base layer, but can it get more?

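Algorithm.

The idea is the following: a receiver periodically tries to subscribe to the next layer (a join experiment), and drops that layer again if the experiment causes congestion.

How do those join experiments work?

How long can the horizontal line (the wait before the next join experiment) be? You actually double it every time you experience congestion; it's exponential backoff on b/w failures.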
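The doubling rule can be sketched as a small timer object. This is only an illustration under stated assumptions: the class name `JoinTimer`, the initial value of 5 seconds and the cap of 600 seconds are made up here and are not taken from the lecture or the paper.

```python
class JoinTimer:
    """Wait time before the next join experiment, doubled on every failure."""

    def __init__(self, initial=5.0, maximum=600.0):
        # Both bounds (in seconds) are hypothetical values for this sketch.
        self.initial = initial
        self.maximum = maximum
        self.value = initial

    def on_failed_experiment(self):
        """The experiment caused congestion: back off exponentially."""
        self.value = min(self.value * 2, self.maximum)
        return self.value


timer = JoinTimer()
waits = [timer.on_failed_experiment() for _ in range(4)]
print(waits)  # [10.0, 20.0, 40.0, 80.0]
```

The cap keeps a receiver from waiting forever after a long run of failures; whether and where to cap is a design choice of this sketch.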

As the layer increases, the join timers increase also. We can say that join experiments are good for a single user. For multiple users, join experiments can induce loss at non-participating receivers. The question here is: how can we coordinate join experiments?

Note: this paper assumes IP multicast, best-effort service, and that all receivers are running RLM.

The trick here is the following: you can announce to your neighbors that you are going to run a join experiment. If some receivers send such messages at the same time then, because of the collision, they back off for a random waiting time.

In general, don't treat loss caused by somebody else's join experiment as congestion of your own. But you can learn something from other receivers' join experiments.

What if another receiver runs a join experiment over the same link you care about and it succeeds? Then you should change your timer to a lower (smaller) number. That's how you can benefit. Also, if it does not succeed, change your timer to a larger number.
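The timer adjustment described above can be sketched as follows. The notes only say "smaller" and "larger"; the factor of 2 and the bounds of 5 and 600 seconds are assumptions of this sketch, and `update_timer` is a made-up name.

```python
def update_timer(timer, succeeded, factor=2.0, minimum=5.0, maximum=600.0):
    """Adjust your own join timer after observing another receiver's experiment.

    factor, minimum and maximum are hypothetical values chosen for illustration.
    """
    if succeeded:
        # The shared link had room: try your own experiment sooner.
        return max(timer / factor, minimum)
    # The shared link is congested at that layer: wait longer.
    return min(timer * factor, maximum)


print(update_timer(40.0, succeeded=True))   # 20.0
print(update_timer(40.0, succeeded=False))  # 80.0
```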

(the end of lecture time)