To communicate, a person with severe motion impairments can use the Camera Mouse with on-screen text-entry software. With this combined assistive technology, the user can participate in the information society, for example, by emailing friends, browsing the Web, or posting Twitter messages. The Camera Mouse is used by children and adults of all ages in schools for children with physical or learning disabilities (e.g., the Boston College Campus School), in long-term care facilities for people with multiple sclerosis and other progressive neurological diseases (e.g., The Boston Home), in hospitals, and in private homes. It is available for free download at www.cameramouse.org. The website provides testimonials from Camera Mouse users and their families from all over the world. Some users have their own websites:

Prof. Betke's research group will continue to develop innovative assistive software for a variety of common applications, including text entry, web browsing, picture editing, and posting to Twitter. A current focus is designing software that learns about and adapts to the user's needs. Ongoing research also involves the development of a tracking system that overcomes the problem of feature drift and of a multi-camera computer vision system that serves as an improved interface for people with severe motion impairments. The research group will explore context-aware approaches to gesture detection that can distinguish a user's communicative motions from involuntary movements or social interactions. The following book chapter gives an overview of the research efforts of Betke's group:

M. Betke. "Intelligent Interfaces to Empower People with Disabilities." In Handbook of Ambient Intelligence and Smart Environments, H. Nakashima, J. C. Augusto, and H. Aghajan (editors), Springer Verlag, 2010. ISBN: 0387938079. Introduction, pdf file.

A fundamental change to the tracking mechanism of the original Camera Mouse has been proposed that leverages kernel projections, a technique traditionally associated with machine learning. In the Augmented Camera Mouse, the interface first learns an Active Hidden Model of the appearance of the user's facial feature while he or she is using the Camera Mouse, and then tracks the feature by matching its current appearance to this model. The Augmented Camera Mouse was shown empirically to be very resilient to feature drift.
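The idea of matching against a learned appearance model, rather than against the previous frame, can be illustrated with a generic kernel-subspace projection. This sketch is not the Active Hidden Model from the cited papers; it assumes the model is simply the span of stored feature appearances in a Gaussian (RBF) kernel feature space, and the names `KernelAppearanceModel`, `gamma`, and `ridge` are hypothetical. A candidate patch is scored by its squared distance to that span, k(x, x) - k^T K^-1 k, and the best match is the candidate the stored appearances explain best.

```python
import math


def rbf(a, b, gamma):
    """Gaussian (RBF) kernel between two equal-length pixel vectors."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


class KernelAppearanceModel:
    """Hypothetical model: the span of stored feature appearances in RBF feature space."""

    def __init__(self, gamma=0.5, ridge=1e-6):
        self.gamma = gamma    # RBF width (hypothetical value)
        self.ridge = ridge    # small diagonal term keeps the kernel matrix well conditioned
        self.examples = []

    def add(self, patch):
        """Store one appearance of the tracked feature (a flattened gray-scale patch)."""
        self.examples.append(list(patch))

    def residual(self, patch):
        """Squared feature-space distance from phi(patch) to its projection on the model span."""
        K = [[rbf(a, b, self.gamma) + (self.ridge if i == j else 0.0)
              for j, b in enumerate(self.examples)]
             for i, a in enumerate(self.examples)]
        k = [rbf(e, patch, self.gamma) for e in self.examples]
        alpha = solve(K, k)   # projection coefficients K^-1 k
        return rbf(patch, patch, self.gamma) - sum(a * ki for a, ki in zip(alpha, k))

    def best_match(self, candidates):
        """Index of the candidate patch with the smallest residual."""
        return min(range(len(candidates)), key=lambda i: self.residual(candidates[i]))
```

Because the stored appearances stay fixed, small matching errors are not chained from frame to frame, which is one intuition for why model-based matching resists drift.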

S. Epstein, E. Missimer, and M. Betke. "Using kernels for a video-based mouse-replacement interface." Personal and Ubiquitous Computing, 18(1):47-60, January 2014. pdf.

S. Epstein and M. Betke. "Active Hidden Models for Tracking with Kernel Projections." Department of Computer Science Technical Report BUCS-2009-006, Boston University. pdf, abstract.

The Camera Mouse can lose the facial feature it is supposed to track. This sometimes happens when the user makes a rapid movement, for example, because of a spastic condition. It also occurs when the facial feature leaves the camera view, because the user turned or tilted his or her head significantly or because a caregiver occluded the view, for example, while wiping the user's face. A new version of the Camera Mouse can detect and recover from feature loss. If it detects that the feature has been lost, it uses a carefully designed multi-phase search process to find the subimage in the current video frame with the best correlation to the image of the initially tracked feature.
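A minimal sketch of loss detection and recovery, assuming that loss is declared when the best normalized correlation falls below a threshold. The three phases used here (local search, coarse whole-frame scan, full-resolution refinement), the threshold `min_score`, and the parameters `radius` and `stride` are hypothetical; the text does not describe the actual phases. Frames and the template are plain lists of gray values.

```python
import math


def ncc(frame, template, top, left):
    """Normalized correlation coefficient between the template and the window at (top, left)."""
    h, w = len(template), len(template[0])
    win = [frame[top + r][left + c] for r in range(h) for c in range(w)]
    tpl = [v for row in template for v in row]
    mw, mt = sum(win) / len(win), sum(tpl) / len(tpl)
    num = sum((a - mw) * (b - mt) for a, b in zip(win, tpl))
    den = math.sqrt(sum((a - mw) ** 2 for a in win) * sum((b - mt) ** 2 for b in tpl))
    return num / den if den > 0 else 0.0  # a flat window carries no evidence


def best_in(frame, template, rows, cols):
    """(score, (top, left)) of the best-correlating window over the given top-left ranges."""
    return max((ncc(frame, template, r, c), (r, c)) for r in rows for c in cols)


def span(center, radius, hi):
    """Indices center-radius .. center+radius, clipped to 0 .. hi."""
    return range(max(0, center - radius), min(hi, center + radius) + 1)


def recover(frame, template, last_pos, radius=3, stride=2, min_score=0.8):
    """Hypothetical multi-phase search; returns ((top, left), score) or (None, score)."""
    max_r = len(frame) - len(template)
    max_c = len(frame[0]) - len(template[0])
    r0 = min(max(last_pos[0], 0), max_r)
    c0 = min(max(last_pos[1], 0), max_c)
    # Phase 1: local search around the last known position.
    score, pos = best_in(frame, template, span(r0, radius, max_r), span(c0, radius, max_c))
    if score >= min_score:
        return pos, score
    # Phase 2: coarse scan of the whole frame on a grid with the given stride.
    _, (r, c) = best_in(frame, template, range(0, max_r + 1, stride), range(0, max_c + 1, stride))
    # Phase 3: full-resolution refinement around the coarse hit.
    score, pos = best_in(frame, template, span(r, stride, max_r), span(c, stride, max_c))
    return (pos, score) if score >= min_score else (None, score)
```

The template passed in is the image of the initially tracked feature, so recovery compares against the original appearance rather than a possibly corrupted recent one.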

C. Connor, E. Yu, J. Magee, E. Cansizoglu, S. Epstein, and M. Betke. "Movement and Recovery Analysis of a Mouse-Replacement Interface for Users with Severe Disabilities." 13th International Conference on Human-Computer Interaction (HCI International 2009), San Diego, CA, July 2009. 10 pp. pdf.

Combining eye-gaze and head-movement interaction

Augmentative and alternative communication tools allow people with severe motion disabilities to interact with computers. Two commonly used tools are video-based interfaces and eye trackers. Video-based interfaces map head movements captured by a camera to mouse pointer movements. Eye trackers, in contrast, place the mouse pointer at the estimated position of the user's gaze. Eye-tracking interfaces have been shown to outperform even traditional mice in terms of speed; however, current eye trackers are not accurate enough for fine mouse pointer placement. We proposed the Head Movement And Gaze Input Cascaded (HMAGIC) pointing technique, which combines head movement and gaze-based inputs in a fast and accurate mouse-replacement interface. The interface first places the pointer at the estimated gaze position, and the user then makes fine adjustments with head movements. We conducted a user experiment to compare HMAGIC with a mouse-replacement interface that uses only head movements to control the pointer. Our experimental results indicate that HMAGIC is significantly faster than the head-only interface while still providing accurate mouse pointer positioning.
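The cascade can be sketched as a pointer that jumps to the gaze estimate for large moves and otherwise follows head motion. This is a hypothetical illustration, not the HMAGIC implementation: the rule that a gaze shift larger than `jump_threshold` pixels triggers a jump, and the `head_gain` factor, are assumptions.

```python
import math


class CascadedPointer:
    """Hypothetical gaze-then-head cascaded pointer (not the HMAGIC implementation)."""

    def __init__(self, jump_threshold=150.0, head_gain=2.0):
        self.jump_threshold = jump_threshold  # px: gaze must be this far from the pointer to jump
        self.head_gain = head_gain            # pointer px per px of head movement
        self.pointer = (0.0, 0.0)

    def update(self, gaze, head_delta):
        """gaze: estimated (x, y) gaze point; head_delta: (dx, dy) head movement since last frame."""
        if math.dist(gaze, self.pointer) > self.jump_threshold:
            # Coarse phase: warp the pointer to the fast but imprecise gaze estimate.
            self.pointer = (float(gaze[0]), float(gaze[1]))
        else:
            # Fine phase: head movement nudges the pointer precisely.
            px, py = self.pointer
            self.pointer = (px + self.head_gain * head_delta[0],
                            py + self.head_gain * head_delta[1])
        return self.pointer
```

In this sketch, gaze jitter smaller than the jump threshold cannot undo a fine head adjustment; a real interface might need a more careful trigger.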

A. Kurauchi, W. Feng, C. Morimoto, and M. Betke. "HMAGIC: Head Movement And Gaze Input Cascaded Pointing." 8th International Conference on Pervasive Technologies Related to Assistive Environments (PETRA), Corfu, Greece, July 1-3, 2015, 5 pages, pdf

Multi-camera Interfaces

Prof. Betke's research group has started to develop a multi-camera interface system that serves as an improved communication tool for people with severe motion impairments. The group is working on a multi-camera capture system that can record synchronized images from multiple cameras and automatically analyze the camera arrangement.

In a preliminary experiment, 15 human subjects were recorded by three cameras while conducting a hands-free interaction experiment. The three-dimensional movement trajectories of various facial features were reconstructed via stereoscopy. The analysis showed substantial feature movement in the third dimension, which is typically neglected by single-camera interfaces based on two-dimensional feature tracking.
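Reconstruction via stereoscopy rests on triangulation. The sketch below shows only the simplest case, two rectified cameras with a known focal length in pixels and baseline in metres, rather than the three-camera setup used in the experiment; all function and parameter names are hypothetical. Depth follows from disparity d as Z = f * B / d.

```python
def triangulate_rectified(xl, yl, xr, focal_px, baseline_m, cx, cy):
    """3-D point (X, Y, Z) in metres, in the left camera's frame, from one rectified match.

    xl, yl: feature position in the left image; xr: its column in the right image
    (same row after rectification); focal_px: focal length in pixels; (cx, cy): principal point.
    """
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("a point in front of both cameras needs positive disparity")
    z = focal_px * baseline_m / disparity   # depth shrinks as disparity grows
    x = (xl - cx) * z / focal_px
    y = (yl - cy) * z / focal_px
    return x, y, z


def depth_range(track, **camera):
    """Spread of depth (Z) over a feature trajectory given as (xl, yl, xr) tuples."""
    zs = [triangulate_rectified(xl, yl, xr, **camera)[2] for xl, yl, xr in track]
    return max(zs) - min(zs)
```

For example, with f = 800 px and B = 0.1 m, a disparity of 40 px gives Z = 2.0 m and a disparity of 50 px gives Z = 1.6 m: a 0.4 m change in depth that two-dimensional tracking does not measure.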

E. Ataer-Cansizoglu and M. Betke. "An information fusion approach for multiview feature tracking." In 20th International Conference on Pattern Recognition (ICPR), August 23-26, 2010, Istanbul, Turkey. IAPR Press, August 2010, 4 pp.

J. Magee, Z. Wu, H. Chennamaneni, S. Epstein, D. H. Theriault, and M. Betke. "Towards a multi-camera mouse-replacement interface." In A. Fred, editor, The 10th International Workshop on Pattern Recognition in Information Systems - PRIS 2010. In conjunction with ICEIS 2010, Madeira, Portugal - June 2010, pages 33-42, INSTICC Press, 2010. pdf.

Blink and Wink Interface

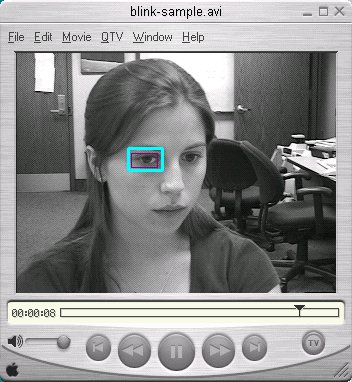

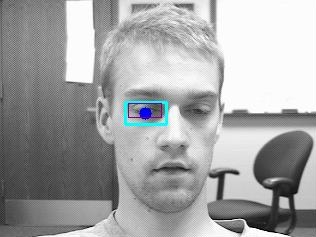

The research group has recently refocused its efforts on improving the mechanism for simulating mouse clicks. The current Camera Mouse software limits the user to left-click commands, which the user executes by hovering over a location for a predetermined amount of time. For users who can control head movements and can wink with one eye while keeping the other eye visibly open, the new "blink and wink interface" allows complete use of a typical mouse, including moving the pointer, left and right clicking, double clicking, and click-and-drag. For users who cannot wink but can blink voluntarily, the system allows left clicks, the most common and useful mouse action.
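The hover-to-click mechanism (often called dwell clicking) can be sketched as a small state machine. The parameters `dwell_s` and `radius_px` are hypothetical values, not the Camera Mouse's settings; the sketch issues one click per hover and re-arms only after the pointer leaves the radius.

```python
import math


class DwellClicker:
    """Hypothetical dwell-time left click: one click when the pointer stays put long enough."""

    def __init__(self, dwell_s=1.0, radius_px=10.0):
        self.dwell_s = dwell_s        # required hover time in seconds (hypothetical)
        self.radius_px = radius_px    # how far the pointer may wander while hovering
        self.anchor = None            # where the current hover started
        self.start_t = 0.0
        self.fired = False            # at most one click per hover

    def update(self, pos, t):
        """Feed the pointer position at time t (seconds); return True when a left click is due."""
        if self.anchor is None or math.dist(pos, self.anchor) > self.radius_px:
            # Pointer moved away: start timing a new hover here.
            self.anchor, self.start_t, self.fired = pos, t, False
            return False
        if not self.fired and t - self.start_t >= self.dwell_s:
            self.fired = True
            return True
        return False
```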

E. Missimer and M. Betke. "Blink and wink detection for mouse pointer control." The 3rd ACM International Conference on Pervasive Technologies Related to Assistive Environments (PETRA 2010), Pythagorion, Samos, Greece. June 2010. 8 pp. pdf.

YouTube video of the Blink & Wink version of Camera Mouse by Eric Missimer, June 2010

The Speaking with Eyes section below describes the group's earlier efforts in developing blink detection algorithms.

Assistive Software

E. Missimer, S. Epstein, J. J. Magee, and M. Betke. "Customizable keyboard." In The 12th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS 2010), Orlando, Florida, USA, October 2010.

J. J. Magee, S. Epstein, E. Missimer, and M. Betke. "Adaptive mappings for mouse-replacement interfaces." In The 12th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS 2010), Orlando, Florida, USA, October 2010.

J. Magee and M. Betke. "HAIL: hierarchical adaptive interface layout." In K. Miesenberger et al. (editors), 12th International Conference on Computers Helping People with Special Needs (ICCHP 2010), Vienna University of Technology, Austria, Part 1, LNCS 6179, pages 139-146. Springer-Verlag Berlin Heidelberg, July 2010. pdf. Abstract.

S. Deshpande and M. Betke. "RefLink: An Interface that Enables People with Motion Impairments to Analyze Web Content and Dynamically Link to References." The 9th International Workshop on Pattern Recognition in Information Systems (PRIS 2009), Milan, Italy, May 2009, A. Fred (editor), pages 28-36, INSTICC Press. pdf.

The original idea for Camera Mouse was developed by Prof. Margrit Betke and Prof. James Gips and described in publications in the proceedings of the 2000 RESNA Conference [pdf] and in the IEEE Transactions on Neural Systems and Rehabilitation Engineering in 2002 [pdf]. For several years the Camera Mouse technology was licensed by Boston College to a start-up company called Camera Mouse, Inc., which developed a commercial version of the program. With the demise of the company and the revocation of the license by Boston College, Jim Gips and Margrit Betke decided to develop a new version of the program and make it available for free on the web in 2007. Many of their students have contributed to the development of Camera Mouse.


The original Camera Mouse used a visual tracking algorithm based on cropping a template of the tracked feature from the current image frame and testing where this template correlates in the subsequent frame. The location of the highest correlation was interpreted as the new location of the feature in the subsequent frame. The 2002 IEEE publication reports that twelve people with severe cerebral palsy or traumatic brain injury had tried the original Camera Mouse and that nine of them had used it successfully. They interacted with their environment by spelling out messages and exploring the Internet.
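A minimal sketch of this correlation tracker on plain lists of gray values: in each step, the template is cropped at the current location and searched for in the next frame, scored with the normalized correlation coefficient. The template `size` and the `search` radius are hypothetical values, not those of the original Camera Mouse.

```python
import math


def crop(img, top, left, size):
    """Flattened size x size window of img with its top-left corner at (top, left)."""
    return [img[r][c] for r in range(top, top + size) for c in range(left, left + size)]


def ncc(a, b):
    """Normalized correlation coefficient of two equal-length pixel lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0 else 0.0


def track_step(prev, curr, pos, size=3, search=2):
    """Crop a template at pos in prev; return the best-correlating position in curr nearby."""
    template = crop(prev, pos[0], pos[1], size)
    max_r, max_c = len(curr) - size, len(curr[0]) - size
    best_score, best_pos = -2.0, pos
    for r in range(max(0, pos[0] - search), min(max_r, pos[0] + search) + 1):
        for c in range(max(0, pos[1] - search), min(max_c, pos[1] + search) + 1):
            score = ncc(template, crop(curr, r, c, size))
            if score > best_score:   # keep the location of highest correlation
                best_score, best_pos = score, (r, c)
    return best_pos, best_score
```

Because the template is re-cropped every frame, small localization errors can accumulate over time, which is the feature-drift problem the later trackers on this page address.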

The research group conducted several experiments with early Camera Mouse users to quantitatively and qualitatively evaluate the performance of the system for different users, different facial features to be tracked, and different applications. For example, as described by Cloud et al. in 2002, study subjects repeatedly performed a number of pointer-movement and text-entry tasks. During each trial of the experiment, a different facial feature was tracked and the elapsed time and mouse movement trajectories were measured.

The research group investigated early on whether the tracking mechanism of the original Camera Mouse, normalized correlation, should be replaced by other computer vision techniques. Fagiani et al. (2002) compared it with the Lucas-Kanade optical flow tracker. Both methods were evaluated with and without multidimensional Kalman filters; two-, four-, and six-dimensional filters were tested to model feature location, velocity, and acceleration. The various tracker and filter combinations were evaluated for accuracy, computational efficiency, and practicality. The normalized correlation coefficient tracker without Kalman filtering was found to be best suited for a variety of human-computer interaction tasks. Nonetheless, when the Camera Mouse was reimplemented to take advantage of the OpenCV library, which is widely used by the computer vision research community, the research group decided to switch to the Lucas-Kanade optical flow tracking method, because at the time the library supported it better than normalized correlation.
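To illustrate what a four-dimensional (location and velocity) filter in that comparison involves, here is a constant-velocity Kalman filter written as two independent per-axis filters. This equals a 4-D filter only under the assumption that x and y noise are uncorrelated, and the noise variances `q` and `r` are hypothetical. The study found the correlation tracker without Kalman filtering best suited, so this shows an evaluated option, not the adopted one.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis; state is [position, velocity]."""

    def __init__(self, q=1e-2, r=4.0):
        self.q, self.r = q, r   # process and measurement noise variances (hypothetical)
        self.x = None           # state estimate, set by the first measurement
        self.P = None           # 2x2 state covariance [[a, b], [c, d]]

    def step(self, z, dt=1.0):
        """Fuse one measured position z and return the filtered position."""
        if self.x is None:
            self.x = [float(z), 0.0]
            self.P = [[self.r, 0.0], [0.0, 1e3]]  # position as good as a measurement, velocity unknown
            return self.x[0]
        # Predict with F = [[1, dt], [0, 1]]: x <- F x, P <- F P F^T + q I
        p, v = self.x[0] + dt * self.x[1], self.x[1]
        (a, b), (c, d) = self.P
        a, b, c, d = a + dt * (b + c) + dt * dt * d + self.q, b + dt * d, c + dt * d, d + self.q
        # Update with measurement matrix H = [1, 0]
        s = a + self.r          # innovation variance
        k0, k1 = a / s, c / s   # Kalman gain
        y = z - p               # innovation
        self.x = [p + k0 * y, v + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
        return self.x[0]


class PointKalman:
    """4-D filter (x, y, vx, vy) built from two independent axis filters."""

    def __init__(self, **noise):
        self.fx, self.fy = AxisKalman(**noise), AxisKalman(**noise)

    def step(self, pt):
        return self.fx.step(pt[0]), self.fy.step(pt[1])
```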

M. Betke, J. Gips, and P. Fleming. "The Camera Mouse: Visual Tracking of Body Features to Provide Computer Access For People with Severe Disabilities." IEEE Transactions on Neural Systems and Rehabilitation Engineering, 10(1), pp. 1-10, March 2002. pdf. PubMed Entry.

J. Gips, M. Betke, P. Fleming, "The Camera Mouse: Preliminary Investigation of Automated Visual Tracking for Computer Access." Proceedings of the Rehabilitation Engineering and Assistive Technology Society of North America 2000 Annual Conference, RESNA 2000, pp. 98-100, Orlando, FL, July 2000. pdf.

J. Gips, M. Betke, and P. A. DiMattia, "Early Experiences Using Visual Tracking for Computer Access by People with Profound Physical Disabilities." In C. Stephanidis, editor, Universal Access in HCI: Towards an Information Society for All: 1st International Conference on Universal Access in Human-Computer Interaction, UAHCI 2001, pp. 914-918, New Orleans, LA, August 2001. Lawrence Erlbaum Associates, Publishers. pdf.

R. L. Cloud, M. Betke, J. Gips, "Experiments with a Camera-Based Human-Computer Interface System." Proceedings of the 7th ERCIM Workshop "User Interfaces for All," UI4ALL 2002, pp. 103-110, Paris, France, October 2002. pdf.

J. Gips, P. DiMattia, M. Betke, "Collaborative Development of New Access Technology and Communication Software," 10th Biennial Conference of the International Society for Augmentative and Alternative Communication, ISAAC 2002, Odense, Denmark, August 2002, pdf.

C. Fagiani, M. Betke, and J. Gips, "Evaluation of Tracking Methods for Human-Computer Interaction." Proceedings of the IEEE Workshop on Applications in Computer Vision (WACV 2002), pp. 121-126, Orlando, Florida, December 3-4, 2002. pdf.

Kristen Grauman, while a student at Boston College and a summer intern at Intel, developed a real-time vision system, the "Blink Link," with Margrit Betke, Jim Gips, and Gary Bradski. The system automatically detected a user's eye blinks and accurately measured their durations. Voluntary long blinks triggered mouse clicks, while involuntary short blinks were ignored. The system enabled communication using "blink patterns": sequences of long and short blinks that were interpreted as semiotic messages. The location of the eyes was determined automatically from the motion of the user's initial blinks. Subsequently, the eye was tracked by correlation across time, and appearance changes were automatically analyzed to classify the eye as either open or closed in each frame. No manual initialization, special lighting, or prior face detection was required. The system was tested by users without disabilities with interactive games and a spelling program.
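The duration analysis can be sketched as follows, assuming an upstream open/closed classifier already yields one flag per frame. The 0.25 s boundary between short and long blinks and the `CODEBOOK` of blink patterns are hypothetical, not values from the Blink Link.

```python
def blink_durations(closed_flags, fps):
    """Durations in seconds of each run of consecutive 'eye closed' frames."""
    durations, run = [], 0
    for closed in list(closed_flags) + [False]:   # trailing sentinel ends a final blink
        if closed:
            run += 1
        elif run:
            durations.append(run / fps)
            run = 0
    return durations


def classify(durations, long_s=0.25):
    """Label each blink as long (voluntary) or short (involuntary); threshold is hypothetical."""
    return ["long" if d >= long_s else "short" for d in durations]


def clicks(labels):
    """Only voluntary (long) blinks trigger clicks; involuntary short blinks are ignored."""
    return labels.count("long")


# Hypothetical codebook mapping blink patterns to messages (not from the Blink Link).
CODEBOOK = {("long", "long"): "yes", ("long", "short", "long"): "no"}


def decode(labels):
    """Interpret a whole blink pattern as a message; None if the pattern is unknown."""
    return CODEBOOK.get(tuple(labels))
```

For example, at 30 frames per second, 3 closed frames (0.1 s) count as a short blink and 12 closed frames (0.4 s) as a long one.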

The 2001 Blink Link, which relied on special hardware (a Matrox image-capture board), was ported to a system with a USB webcam by Betke's Master's student Mike Chau in 2005. More recently, Betke's PhD student Eric Missimer developed the blink and wink interface described above, which significantly extends the functionality of the Blink Link: it gives users who can wink full mouse functionality and gives users who can only blink voluntarily the ability to left-click.

K. Grauman, M. Betke, J. Gips, G. R. Bradski, "Communication via Eye Blinks - Detection and Duration Analysis in Real Time," Proceedings of the IEEE Computer Vision and Pattern Recognition Conference CVPR 2001, Kauai, Hawaii, December 2001.

K. Grauman, M. Betke, J. Lombardi, J. Gips, and G. Bradski, "Communication via Eye Blinks and Eyebrow Raises: Video-Based Human-Computer Interfaces." Universal Access in the Information Society, 2(4), 359-373, November 2003.

M. Chau and M. Betke. "Real Time Eye Tracking and Blink Detection with USB Cameras." Department of Computer Science Technical Report BUCS-2005-012, Boston University. April 28, 2005. Abstract, pdf.

E. Missimer and M. Betke. "Blink and wink detection for mouse pointer control." The 3rd ACM International Conference on Pervasive Technologies Related to Assistive Environments (PETRA 2010), Pythagorion, Samos, Greece. June 2010. 8 pp. pdf.

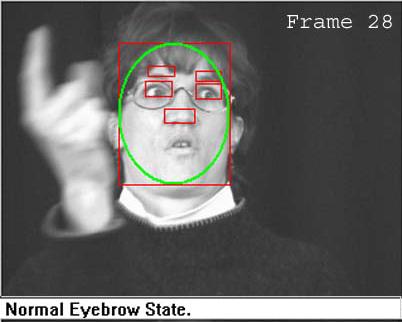

Margrit Betke's student Jonathan Lombardi developed another mechanism to initiate mouse clicks: eyebrow raises. The Eyebrow-Clicker is a camera-based human-computer interface system that implements a new form of simulated binary switch. When the user raises his or her eyebrows, the binary switch is activated and a selection command is issued. The Eyebrow-Clicker thus replaces the "left-click" functionality of a mouse.

Like the original Blink Link, the Eyebrow-Clicker was intended to provide an alternative input modality that allows people with severe disabilities to access a computer, and it was initially tested only with users without motion impairments. Early Camera Mouse users had conditions (e.g., cerebral palsy) that did not allow them to control the muscles that move their eyelids and eyebrows. Only recently has the research group worked with a stroke survivor who can control these muscles and in fact relies on "eye opening" as his only means of communication. The research group will report on the experiences of this user in the near future.
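A simulated binary switch of this kind can be sketched as follows, assuming some tracker reports the vertical distance between eyebrow and eye in each frame. The firing rule (distance at least `ratio` times a resting baseline for `hold_frames` consecutive frames) and both parameter values are hypothetical, not the Eyebrow-Clicker's actual detector.

```python
class EyebrowSwitch:
    """Hypothetical eyebrow-raise switch driven by a per-frame eyebrow-to-eye distance."""

    def __init__(self, ratio=1.2, hold_frames=3):
        self.ratio = ratio              # 'raised' means distance >= ratio * baseline
        self.hold_frames = hold_frames  # consecutive raised frames needed to fire
        self.baseline = None            # resting distance, taken from the first frame
        self.count = 0
        self.armed = True               # eyebrows must be lowered before the next selection

    def update(self, distance):
        """Return True exactly once per sustained eyebrow raise."""
        if self.baseline is None:
            self.baseline = distance
            return False
        raised = distance >= self.ratio * self.baseline
        if not raised:
            self.count, self.armed = 0, True
            return False
        self.count += 1
        if self.armed and self.count >= self.hold_frames:
            self.armed = False
            return True
        return False
```

Requiring several consecutive raised frames is one simple way to keep a single noisy frame from issuing a selection.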

J. Lombardi and M. Betke, "A Camera-based Eyebrow Tracker for Hands-free Computer Control via a Binary Switch." 7th ERCIM Workshop "User Interfaces for All," UI4ALL 2002, pp. 199-200, Paris, France, October 2002. pdf.

K. Grauman, M. Betke, J. Lombardi, J. Gips, and G. Bradski, "Communication via Eye Blinks and Eyebrow Raises: Video-Based Human-Computer Interfaces." Universal Access in the Information Society, 2(4), 359-373, November 2003.

J. J. Magee, M. Betke, J. Gips, M. R. Scott, B. N. Waber, "A human-computer interface using symmetry between eyes to detect gaze direction," IEEE Transactions on Systems, Man, and Cybernetics, 38(6):1-14, November 2008. Abstract. Pdf file. Video.

J. J. Magee, M. R. Scott, B. N. Waber and M. Betke. "EyeKeys: A Real-time Vision Interface Based on Gaze Detection from a Low-grade Video Camera." Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop (CVPRW'04), Volume 10: Workshop on Real-Time Vision for Human-Computer Interaction (RTV4HCI), pp. 159-166, Washington, D.C., June 2004, pdf. A revised version of the paper appeared under the title "A Real-Time Vision Interface Based on Gaze Detection -- EyeKeys" in "Real-Time Vision for Human-Computer Interaction," B. Kisacanin, V. Pavlovic, and T. S. Huang (editors), 2005, pages 141-157, Springer-Verlag. Abstract. pdf.

A very different approach to eye gaze detection was developed by Betke and Kawai in 1999. They proposed an unsupervised learning algorithm to estimate the position of the pupil with respect to the center of the eye. The algorithm creates a map of self-organized gray-scale subimages that collectively learn to represent the outline of the eye:

M. Betke and J. Kawai, "Gaze Detection via Self-Organizing Gray-Scale Units." Proceedings of the International Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems, pp. 70-76, Kerkyra, Greece, September 1999. pdf.
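For readers unfamiliar with self-organizing maps, the textbook Kohonen update is sketched below on a one-dimensional chain of units whose weight vectors could hold flattened gray-scale subimages. This is a generic SOM, not the algorithm of the paper above: the chain topology and the learning-rate and neighborhood schedules are assumptions, and how a pupil position would be read from the trained map is omitted.

```python
import math
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def som_step(units, x, lr, sigma):
    """One Kohonen update on a 1-D chain of units; returns the best-matching unit's index."""
    bmu = min(range(len(units)), key=lambda i: sq_dist(units[i], x))
    for i, w in enumerate(units):
        h = math.exp(-((i - bmu) ** 2) / (2 * sigma ** 2))  # chain neighbors of the winner also learn
        for k in range(len(w)):
            w[k] += lr * h * (x[k] - w[k])
    return bmu


def train_som(samples, n_units, epochs=20, lr0=0.5, seed=0):
    """Train a chain of units on samples (e.g. flattened subimages) with shrinking schedules."""
    rng = random.Random(seed)
    units = [list(rng.choice(samples)) for _ in range(n_units)]
    sigma0 = max(n_units / 2, 1.0)
    total, t = epochs * len(samples), 0
    for _ in range(epochs):
        for x in rng.sample(samples, len(samples)):
            frac = t / total
            som_step(units, x, lr0 * (1 - frac), max(sigma0 * (1 - frac), 0.5))
            t += 1
    return units
```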

More than a decade ago, Margrit Betke and her students Bill Mullally and John Magee developed a real-time eye detection and tracking system. They proposed two interesting strategies:


M. Betke, B. Mullally, J. Magee, "Active Detection of Eye Scleras in Real Time." Proceedings of the IEEE CVPR Workshop on Human Modeling, Analysis and Synthesis, Hilton Head Island, SC, June 2000. pdf.

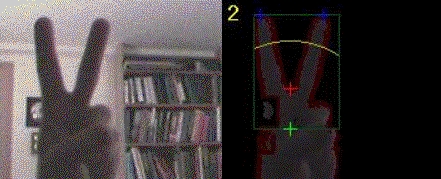

The group extended Hidden State Shape Models (HSSMs) to Dynamic Hidden-State Shape Models (DHSSMs) to track and recognize the non-rigid motion of objects with structural shape variation, in particular, human hands. Betke's student Zheng Wu conducted experiments showing that the proposed method can recognize the fingers of a hand while they are being moved and curled to various degrees. The method was also shown to be robust to varying illumination, clutter, occlusions, and some types of self-occlusion.

J. Wang, V. Athitsos, S. Sclaroff, and M. Betke. "Detecting Objects of Variable Shape Structure with Hidden State Shape Models." IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(3):477-492, March 2008. Abstract, pdf. Videos.

Z. Wu, M. Betke, J. Wang, V. Athitsos, and S. Sclaroff, "Tracking with Dynamic Hidden-State Shape Models," Computer Vision - ECCV 2008, 10th European Conference on Computer Vision, Marseille, France, October 12-18, 2008, Proceedings, Part I, LNCS 5302, D. Forsyth, P. Torr, and A. Zisserman (editors), pages 643-656, Springer-Verlag. pdf.

Finger Counter is a computer-vision system that counts the number of fingers held up in front of a video camera in real time. The system is designed as a simple and universal human-computer interface: potential applications include educational tools for young children and supplemental input devices, particularly for persons with disabilities. The interface is language independent and requires minimal education and computer literacy.

Finger Counter uses background differencing and edge detection to locate the outline of the hand. The system then processes the polar-coordinate representation of the pixels on the outline to identify and count fingers: fingers are recognized as protrusions that meet particular requirements. The system logs the frequency of different inputs over a given time interval.
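The counting step can be sketched as follows, assuming background differencing and edge detection have already produced the hand outline as an ordered list of points. The text does not specify the "particular requirements" for a protrusion, so the test used here (radius more than `min_ratio` times the mean radius for at least `min_run` consecutive outline points) is a hypothetical stand-in.

```python
import math


def count_fingers(outline, min_ratio=1.3, min_run=2):
    """Count finger-like protrusions along a closed hand outline of (x, y) points in order."""
    cx = sum(x for x, _ in outline) / len(outline)
    cy = sum(y for _, y in outline) / len(outline)
    # Polar representation: radial distance of each outline pixel from the hand centroid.
    radii = [math.hypot(x - cx, y - cy) for x, y in outline]
    cutoff = min_ratio * sum(radii) / len(radii)   # hypothetical protrusion test
    far = [r > cutoff for r in radii]
    if all(far) or not any(far):
        return 0
    start = far.index(False)                       # rotate so no run wraps around the end
    far = far[start:] + far[:start]
    fingers, run = 0, 0
    for f in far + [False]:
        if f:
            run += 1
        else:
            if run >= min_run:                     # ignore one-pixel spikes from noisy edges
                fingers += 1
            run = 0
    return fingers
```

For a synthetic outline with radius 10 everywhere except five evenly spaced 10-degree bands of radius 20, the function returns 5.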

Betke's student Stephen Crampton implemented the Finger Counter interface under Linux using Video4Linux and under Microsoft Windows as a DirectShow filter. The system was tested extensively under various lighting and background conditions. During testing, the system successfully counted the fingers of numerous subjects with different hand shapes, sizes, and skin colors. Crampton also incorporated the Finger Counter interface into a children's game for learning and entertainment.


S. C. Crampton and M. Betke, "Finger Counter: A Human-Computer Interface." Accepted at the 7th ERCIM Workshop "User Interfaces for All," UI4ALL 2002, pp. 195-196, Paris, France, October 2002.

S. Crampton, M. Betke, "Counting Fingers in Real Time: A Webcam-Based Human-Computer Interface with Game Applications," Proceedings of the Conference on Universal Access in Human-Computer Interaction, affiliated with HCI International 2003, pp. 1357-1361, Crete, Greece, June 2003. pdf.

T. Castelli, M. Betke, and C. Neidle, "Facial Feature Tracking and Occlusion Processing in American Sign Language"

Please visit our ASL web page.