Profs. Betke and Sclaroff and their students proposed a new method for object detection and tracking. The method, described in IEEE Trans. PAMI 2008, uses Hidden State Shape Models (HSSMs), a generalization of Hidden Markov Models, which were introduced to model object classes of variable shape structure, such as hands, using a probabilistic framework. The proposed polynomial-time inference algorithm automatically determines hand location, orientation, scale and finger structure by finding the globally optimal registration of model states with the image features, even in the presence of clutter. Betke's student Jingbin Wang conducted experiments that showed that the proposed method is significantly more accurate than previously proposed methods based on chamfer-distance matching.
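
Since HSSMs generalize Hidden Markov Models, the idea of a globally optimal assignment of model states to features can be illustrated with the standard Viterbi algorithm for an ordinary HMM. The sketch below is not the HSSM inference algorithm from the paper; the state names, feature symbols, and probabilities are hypothetical toy values chosen only for illustration.

```python
import math

def viterbi(observations, states, log_start, log_trans, log_emit):
    """Return (best_log_prob, best_state_path) for a standard HMM."""
    # best[t][s]: log-probability of the best path that ends in state s at step t
    best = [{s: log_start[s] + log_emit[s][observations[0]] for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + log_trans[p][s]) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log_emit[s][observations[t]]
            back[t][s] = prev
    # Trace the optimal path backwards from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path

def logs(table):
    """Convert a (possibly nested) table of probabilities to log-probabilities."""
    return {k: logs(v) if isinstance(v, dict) else math.log(v) for k, v in table.items()}

# Hypothetical toy model: two shape parts and two contour-width features.
states = ["palm", "finger"]
log_start = logs({"palm": 0.8, "finger": 0.2})
log_trans = logs({"palm": {"palm": 0.6, "finger": 0.4},
                  "finger": {"palm": 0.3, "finger": 0.7}})
log_emit = logs({"palm": {"wide": 0.9, "narrow": 0.1},
                 "finger": {"wide": 0.2, "narrow": 0.8}})

log_prob, path = viterbi(["wide", "narrow", "narrow"], states,
                         log_start, log_trans, log_emit)
print(path, math.exp(log_prob))
```

Working in log-probabilities avoids numerical underflow on long feature sequences; the dynamic program visits every state pair once per step, so its cost is polynomial in the number of states and features.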

The group extended the model to Dynamic Hidden-State Shape Models (DHSSMs) to track and recognize the non-rigid motion of objects with structural shape variation, in particular, human hands. Betke's student Zheng Wu conducted experiments that showed that the proposed method can recognize the digits of a hand while the fingers are being moved and curled to various degrees. The method was also shown to be robust to various illumination conditions, the presence of clutter, occlusions, and some types of self-occlusions.

J. Wang, V. Athitsos, S. Sclaroff, and M. Betke. "Detecting Objects of Variable Shape Structure with Hidden State Shape Models." IEEE Transactions on Pattern Analysis and Machine Intelligence. 30(3):477-492, March 2008. Abstract, pdf. Videos.

Z. Wu, M. Betke, J. Wang, V. Athitsos, and S. Sclaroff, "Tracking with Dynamic Hidden-State Shape Models," Computer Vision - ECCV 2008, 10th European Conference on Computer Vision, Marseille, France, October 12-18, 2008, Proceedings, Part I, LNCS 5302, D. Forsyth, P. Torr, and A. Zisserman (editors), pages 643-656, Springer-Verlag. pdf.

Jingbin Wang, Erdan Gu, and Margrit Betke proposed a method that combines shape-based object recognition and image segmentation techniques. Given a shape prior represented in a multiscale curvature form, the proposed method identifies the target objects in images by grouping oversegmented image regions. The problem is formulated in a unified probabilistic framework, and object segmentation and recognition are accomplished simultaneously by a stochastic Markov Chain Monte Carlo (MCMC) mechanism. Within each sampling move during the simulation process, probabilistic region grouping operations are influenced by both the image information and the shape similarity constraint. The latter constraint is measured by a partial shape matching process. A generalized cluster sampling algorithm, combined with a large sampling jump and other implementation improvements, greatly speeds up the overall stochastic process. The proposed method supports the segmentation and recognition of multiple occluded objects in images. Betke's student Jingbin Wang conducted experiments using both synthetic and real images.
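
The role of a sampling move that weighs image evidence against a shape constraint can be sketched with a plain single-site Metropolis sampler. This is a simplified stand-in, not the generalized cluster sampling algorithm described above: the region features, the Gaussian data term, and the "target size" penalty that plays the part of the shape-similarity term are all hypothetical.

```python
import math
import random

# Hypothetical toy data: one mean-intensity feature per oversegmented region.
FEATURES = [0.9, 0.8, 0.2, 0.1, 0.85]
OBJECT_MEAN, BACKGROUND_MEAN, SIGMA = 0.9, 0.1, 0.2
TARGET_SIZE, SHAPE_WEIGHT = 3, 1.0  # crude stand-in for a shape-similarity term

def energy(labels):
    """Negative log-posterior (up to a constant) of a region labeling."""
    data = 0.0
    for feature, label in zip(FEATURES, labels):
        mean = OBJECT_MEAN if label == 1 else BACKGROUND_MEAN
        data += (feature - mean) ** 2 / (2.0 * SIGMA ** 2)
    shape = SHAPE_WEIGHT * (sum(labels) - TARGET_SIZE) ** 2
    return data + shape

def metropolis(initial, steps=2000, seed=0):
    """Flip one region label per move; return the lowest-energy labeling visited."""
    rng = random.Random(seed)
    current = list(initial)
    current_e = energy(current)
    best, best_e = list(current), current_e
    for _ in range(steps):
        proposal = list(current)
        i = rng.randrange(len(proposal))
        proposal[i] = 1 - proposal[i]  # move region i in or out of the object group
        proposal_e = energy(proposal)
        # Symmetric proposal, so accept with probability min(1, exp(-(E_new - E_old))).
        if proposal_e <= current_e or rng.random() < math.exp(current_e - proposal_e):
            current, current_e = proposal, proposal_e
            if current_e < best_e:
                best, best_e = list(current), current_e
    return best, best_e

initial = [0] * len(FEATURES)
best_labels, best_energy = metropolis(initial)
print(best_labels, round(best_energy, 3))
```

For these toy numbers the lowest-energy labeling is `[1, 1, 0, 0, 1]`. Single-site flips mix slowly on real images, which is one motivation for moves that relabel whole clusters of regions at once.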

J. Wang, E. Gu, and M. Betke. "MosaicShape: Stochastic Region Grouping with Shape Prior." Proceedings of the IEEE Computer Society International Conference on Computer Vision and Pattern Recognition (CVPR 2005), pp. 902-908, San Diego, CA, USA, June 2005. Abstract. Pdf file.

Sam Epstein and Margrit Betke extended the Semi-least Squares problem defined by Rao and Mitra in 1971 to the Kernel Semi-least Squares problem. They introduced "subset projection," a technique that produces a solution to this problem. They showed how the results of such a subset projection can be used to approximate a computationally expensive distance metric. The general problem of distance metric learning is common in real-world applications such as computer vision and content retrieval. The proposed variant of the distance learning problem has particular applications to exemplar-based video tracking. Epstein and Betke developed the Kernel-Subset-Tracker, which determines the distance (similarity) of the tracked object to each training image of the object. From these distances, a template is created and used to find the next position of the object in the video frame. Experiments with test subjects show that augmenting the Camera Mouse with the Kernel-Subset-Tracker yields a statistically significant improvement in communication bandwidth. Tracking of facial features was accurate, without feature drift, even during rapid head movements and extreme head orientations. The Camera Mouse augmented with the Kernel-Subset-Tracker enabled a stroke victim with severe motion impairments to communicate via an on-screen keyboard.
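
The exemplar-based tracking loop described above (similarity to each training image, then a template, then a search in the next frame) can be sketched in a few lines. This is only an illustration under strong assumptions: it uses a Gaussian kernel, a similarity-weighted average as the template, and a sum-of-squared-differences search over a one-dimensional signal, none of which is claimed to match the subset-projection technique or the actual Kernel-Subset-Tracker. All function names and data are hypothetical.

```python
import math

def gaussian_kernel(a, b, bandwidth=1.0):
    """Similarity in (0, 1] between two equally sized patches."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))

def blend_template(current_patch, exemplars, bandwidth=1.0):
    """Weight each training exemplar by its similarity to the current patch."""
    weights = [gaussian_kernel(current_patch, e, bandwidth) for e in exemplars]
    total = sum(weights)
    weights = [w / total for w in weights]
    template = [
        sum(w * e[i] for w, e in zip(weights, exemplars))
        for i in range(len(current_patch))
    ]
    return weights, template

def best_match(signal, template):
    """Return the start index where the template fits the signal best (lowest SSD)."""
    n = len(template)

    def ssd(start):
        return sum((signal[start + i] - template[i]) ** 2 for i in range(n))

    return min(range(len(signal) - n + 1), key=ssd)

# Hypothetical 3-pixel exemplars of the tracked feature and the current observation.
exemplars = [[0.0, 1.0, 0.0], [1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]
current_patch = [0.1, 0.9, 0.0]
weights, template = blend_template(current_patch, exemplars, bandwidth=0.5)

# Hypothetical next "frame" (a 1-D row of pixels) in which the feature has moved.
next_frame = [0.0, 0.0, 0.0, 0.1, 1.0, 0.1, 0.0, 0.0]
position = best_match(next_frame, template)
print(weights, template, position)
```

Because the template is rebuilt from fixed training exemplars at every frame rather than from the previous frame's patch, small localization errors do not accumulate, which is the usual argument for why exemplar-based trackers resist drift.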

S. Epstein and M. Betke. Active Hidden Models for Tracking with Kernel Projections, Department of Computer Science Technical Report BUCS-2009-006, Boston University. March 10, 2009. Abstract. pdf. ps.

S. Epstein and M. Betke. The Kernel Semi-least Squares Method for Distance Approximation. Manuscript under review. September 2010.

S. Epstein, E. Missimer, and M. Betke. Improving the Camera Mouse with the Kernel-Subset-Tracker. Unpublished manuscript. September 2010.

Margrit Betke, in collaboration with Eran Naftali and Nick Makris, derived analytic conditions that are necessary for the maximum likelihood estimate to become asymptotically unbiased and attain minimum variance for estimation problems in computer vision. In particular, they investigated problems of estimating the parameters that describe the 3D structure of rigid objects or their motion. It is common practice to compute Cramer-Rao lower bounds (CRLB) to approximate the mean-square error in parameter estimation problems, but the CRLB is not guaranteed to be a tight bound and typically underestimates the true mean-square error. The authors derived the necessary conditions for the CRLB to be a good approximation of the mean-square error. The tightness of the bound depends on the noise level, the number of pixels on the surface of the object, and the texture of the surface. They examined their analytical results experimentally using polyhedral objects that consist of planar surface patches with various textures that move in 3D space. The resulting necessary conditions for attaining the CRLB depend on the size, texture, and noise level of the surface patch.
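
For readers unfamiliar with the bound, its general textbook form and a scalar example may help. The notation below ($\theta$ for the parameter vector, $J$ for the Fisher information matrix, $N$ measurements with noise variance $\sigma^2$) is an assumption made for this illustration and is not taken from the paper.

```latex
% Cramer-Rao lower bound for any unbiased estimator \hat{\theta} of \theta
\[
  \mathrm{E}\!\left[(\hat{\theta}-\theta)(\hat{\theta}-\theta)^{\mathsf{T}}\right]
  \succeq J(\theta)^{-1},
  \qquad
  J_{ij}(\theta) = -\,\mathrm{E}\!\left[
    \frac{\partial^{2} \ln p(x;\theta)}{\partial\theta_i\,\partial\theta_j}
  \right].
\]
% Scalar example: N independent measurements of a constant \mu in Gaussian noise
\[
  x_k = \mu + n_k,\quad n_k \sim \mathcal{N}(0,\sigma^{2}),\quad k = 1,\dots,N
  \;\Longrightarrow\;
  J(\mu) = \frac{N}{\sigma^{2}},
  \qquad
  \operatorname{var}(\hat{\mu}) \ge \frac{\sigma^{2}}{N}.
\]
```

In this scalar case the sample mean attains the bound for every $N$. For nonlinear problems such as structure and motion estimation, the maximum likelihood estimate generally approaches the bound only asymptotically, for example at low noise or with many measurements, which is why conditions of the kind described above are needed.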

M. Betke, E. Naftali and N. C. Makris, "Necessary Conditions to Attain Performance Bounds on Structure and Motion Estimates of Rigid Objects," Proceedings of the IEEE Computer Vision and Pattern Recognition Conference (CVPR 2001) Vol. 1, pp. 448-455, Kauai, Hawaii, December 2001. pdf.

The problem of recognizing objects subject to affine transformation in images is examined from a physical perspective using the theory of statistical estimation. Focusing first on objects that occlude zero-mean scenes with additive noise, we derive the Cramer-Rao lower bound on the mean-square error in an estimate of the six-dimensional parameter vector that describes an object subject to affine transformation and so generalize the bound on one-dimensional position error previously obtained in radar and sonar pattern recognition. We then derive two useful descriptors from the object's Fisher information that are independent of noise level. The first is a generalized coherence scale that has great practical value because it corresponds to the width of the object's autocorrelation peak under affine transformation and so provides a physical measure of the extent to which an object can be resolved under affine parameterization. The second is a scalar measure of an object's complexity that is invariant under affine transformation and can be used to quantitatively describe the ambiguity level of a general 6-dimensional affine recognition problem. This measure of complexity has a strong inverse relationship to the level of recognition ambiguity. We then develop a method for recognizing objects subject to affine transformation imaged in thousands of complex real-world scenes. Our method exploits the resolution gain made available by the brightness contrast between the object perimeter and the scene it partially occludes. The level of recognition ambiguity is shown to decrease exponentially with increasing object and scene complexity. Ambiguity is then avoided by conditioning the permissible range of template complexity above a priori thresholds. Our method is statistically optimal for recognizing objects that occlude scenes with zero-mean background.
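
To make the six-dimensional parameter vector concrete, the sketch below applies a planar affine transformation to a few points. The ordering of the parameters (four linear-map entries followed by a translation) and the function name are assumptions for this illustration; the papers may parameterize the transformation differently, for example by position, scale, rotation, and skew.

```python
def apply_affine(params, points):
    """Map each (x, y) to (a11*x + a12*y + tx, a21*x + a22*y + ty)."""
    a11, a12, a21, a22, tx, ty = params
    return [(a11 * x + a12 * y + tx, a21 * x + a22 * y + ty) for x, y in points]

# Hypothetical example: scale by 2, rotate 90 degrees counterclockwise, shift by (1, 1).
params = (0.0, -2.0, 2.0, 0.0, 1.0, 1.0)
corners = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
moved = apply_affine(params, corners)
print(moved)  # [(1.0, 1.0), (1.0, 3.0), (-1.0, 1.0)]
```

Recognition under affine transformation then amounts to estimating these six numbers from image data, and the Cramer-Rao analysis described above bounds how accurately that can be done.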

M. Betke and N. Makris, "Recognition, Resolution and Complexity of Objects Subject to Affine Transformation." International Journal of Computer Vision, 44:1, pp. 5-40, August 2001. pdf.

M. Betke and N. Makris, "Information-Conserving Object Recognition." Proceedings of the Sixth International Conference on Computer Vision, pp. 145-152, Bombay, India, January 1998. pdf.

M. Betke and N. Makris, "Fast Object Recognition in Noisy Images Using Simulated Annealing." Proceedings of the Fifth International Conference on Computer Vision, pp. 523-530, June 1995. pdf.

The project started at the University of Maryland, where Margrit Betke was a postdoctoral researcher, working with Larry S. Davis and Esin Haritaoglu. She later collaborated with Huan Nguyen from Boston College on some extensions of the project. Her student William Mullally eventually started a project on analyzing the face and eyes of a driver.

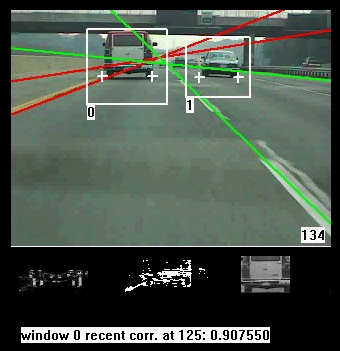

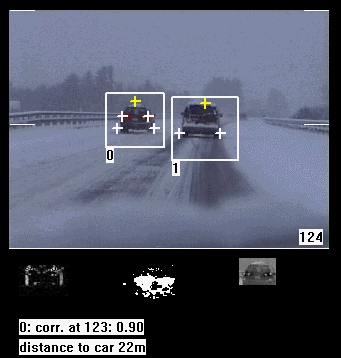

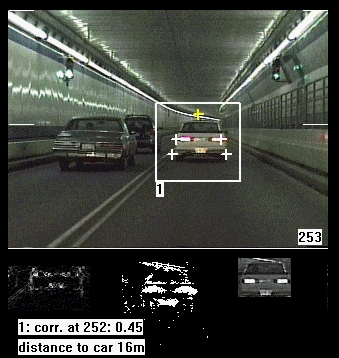

In these videos, each detected car is automatically annotated with a white frame. Each detected object that is potentially a car is annotated with a black frame. The linear approximations of lane markers are used to establish regions of interest for the car detector. Cars in front are detected reliably. Cars in other lanes can be detected reliably only in highway scenes or scenes with sufficient visibility. In the tunnel sequence, the apparent size of passing cars is larger than the modeled size. Passing cars are thus not detected correctly.

B. Mullally, M. Betke, "Preliminary Investigation of Real-Time Monitoring of a Driver in City Traffic." Proceedings of the IEEE International Conference on Intelligent Vehicles, Dearborn, MI, October 2000. pdf (color), pdf (IEEE version), ps.Z, ps.

M. Betke, E. Haritaoglu and L. S. Davis, "Real-Time Multiple Vehicle Detection and Tracking from a Moving Vehicle." Machine Vision and Applications, 12:2, pp. 69-83, September 2000. pdf (restricted access).

M. Betke and H. Nguyen, "Highway Scene Analysis from a Moving Vehicle under Reduced Visibility Conditions," IEEE International Conference on Intelligent Vehicles, Stuttgart, Germany, October 1998. pdf (color).

M. Betke, E. Haritaoglu and L. Davis, "Highway Scene Analysis." Proceedings of the IEEE Conference on Intelligent Transportation Systems, ITSC'97, pp. 812-817, Boston, November 1997. pdf.

M. Betke, E. Haritaoglu and L. Davis, "Highway Scene Analysis in Hard Real Time." Proceedings of the IEEE Conference on Intelligent Transportation Systems, ITSC'97, page 367, Boston, November 9-12, 1997. Abstract.

M. Betke, E. Haritaoglu and L. S. Davis, "Multiple Vehicle Detection and Tracking in Hard Real Time." Proceedings of the 1996 IEEE Intelligent Vehicles Symposium, pp. 351-356, Seikei University, Tokyo, Japan, September 19-20, 1996. pdf.