# for QR codes use inline

# %matplotlib inline

# qr_setting = 'url'

# qrviz_setting = 'show'

#

# for lecture use notebook

%matplotlib inline

qr_setting = None

#

%config InlineBackend.figure_format='retina'

# import libraries

import numpy as np

import matplotlib as mp

import pandas as pd

import matplotlib.pyplot as plt

import laUtilities as ut

import slideUtilities as sl

import demoUtilities as dm

import pandas as pd

from importlib import reload

from datetime import datetime

from IPython.display import Image

from IPython.display import display_html

from IPython.display import display

from IPython.display import Math

from IPython.display import Latex

from IPython.display import HTML;

# Animation Notes

#

from matplotlib import animation

#

# two methods for animation - see:

# http://louistiao.me/posts/notebooks/embedding-matplotlib-animations-in-jupyter-as-interactive-javascript-widgets/

# 1 - directly inline via %matplotlib notebook - advantage: real-time, disadvantage: no manual control

# 2 - encode as video, embedded into notebook (base64) and display via notebook player or JS widget

# downside - not real time, need to generate complete video before it can be displayed

# 2a - HTML(anim.to_html5_video()) [notebook player, bare bones] [uses html5 <video> tag]

# 2b - HTML(anim.to_jshtml()) [JS widget, extra controls]

# these latter can be made default representation via next line, which can also be 'html5'

mp.rcParams['animation.html'] = 'jshtml'

# 3 - save as an animated GIF and display using

# rot_animation.save('rotation.gif', dpi=80, writer='imagemagick')

#

# see also https://stackoverflow.com/questions/35532498/animation-in-ipython-notebook/46878531#46878531

Symmetric Matrices¶

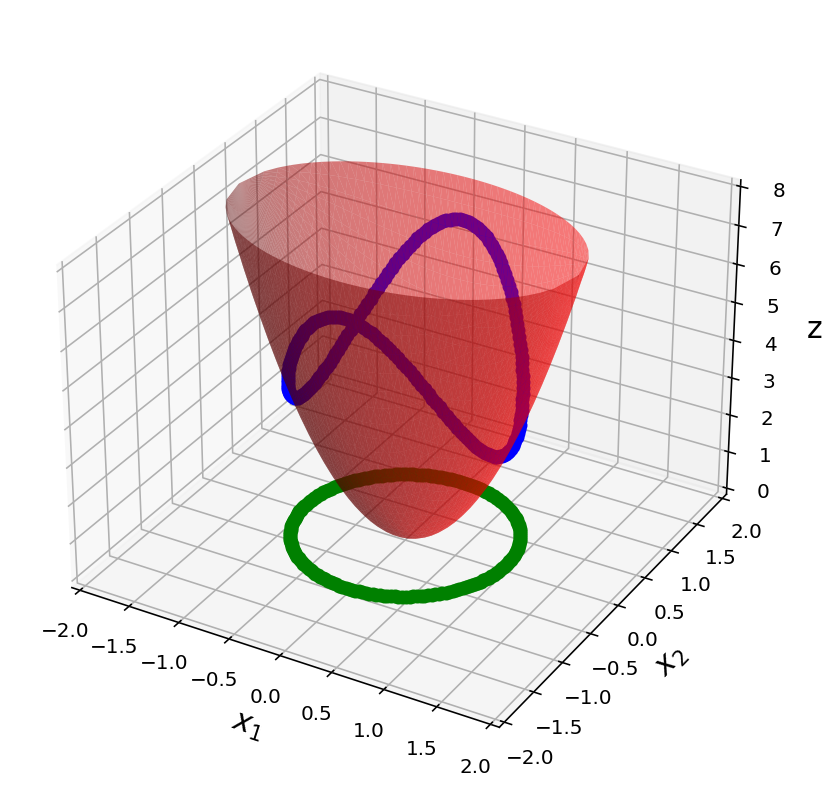

fig = ut.three_d_figure((24, 5),

'Intersection of the positive definite quadratic form z = 3 x1^2 + 7 x2 ^2 with the constraint ||x|| = 1',

-2, 2, -2, 2, 0, 8,

equalAxes = False, figsize = (7, 7), qr = qr_setting)

qf = np.array([[3., 0.],[0., 7.]])

for angle in np.linspace(0, 2*np.pi, 200):

x = np.array([np.cos(angle), np.sin(angle)])

z = x.T @ qf @ x

fig.plotPoint(x[0], x[1], z, 'b')

fig.plotPoint(x[0], x[1], 0, 'g')

fig.plotQF(qf, alpha=0.5)

fig.ax.set_zlabel('z')

fig.desc['zlabel'] = 'z'

# do not call fig.save here

Today we’ll study a very important class of matrices: symmetric matrices.

We’ll see that symmetric matrices have properties that relate to both eigendecomposition, and orthogonality.

Furthermore, symmetric matrices open up a broad class of problems we haven’t yet touched on: constrained optimization.

As a result, symmetric matrices arise very often in applications.

Definition. A symmetric matrix is a matrix \(\,A\) such that \(\;A^T = A\).

Clearly, such a matrix is square.

Furthermore, the entries that are not on the diagonal come in pairs, on opposite sides of the diagonal.

Example. Here are three symmetric matrices:

Here are three nonsymmetric matrices:

Orthogonal Diagonalization¶

At first glance, a symmetric matrix may not seem that special!

But in fact symmetric matrices have a number of interesting properties.

First, we’ll look at a remarkable fact:

Example. Diagonalize the following symmetric matrix:

Solution.

The characteristic equation of \(A\) is

So the eigenvalues are 8, 6, and 3.

We construct a basis for each eigenspace (using our standard method of finding the nullspace of \(A-\lambda I\)):

These three vectors form a basis for \(\mathbb{R}^3.\)

But here is the remarkable thing: these three vectors form an orthogonal set.

That is, any two eigenvectors are orthogonal.

For example,

As a result, \(\{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\}\) form an orthogonal basis for \(\mathbb{R}^3.\)

Let’s normalize these vectors so they each have length 1:

The orthogonality of the eigenvectors of a symmetric matrix is quite important.

To see this, let’s write the diagonalization of \(A\) in terms of these eigenvectors and eigenvalues:

Then, \(A = PDP^{-1},\) as usual.

But, here is the interesting thing:

\(P\) is square and has orthonormal columns.

So \(P\) is an orthogonal matrix.

So, that means that \(P^{-1} = P^T.\)

So, \(A = PDP^T.\)

The Spectral Theorem¶

Here is a theorem that shows that we always get orthogonal eigenvectors when we diagonalize a symmetric matrix:

Theorem. If \(A\) is symmetric, then any two eigenvectors of \(A\) from different eigenspaces are orthogonal.

Proof.

Let \(\mathbf{v}_1\) and \(\mathbf{v}_2\) be eigenvectors that correspond to distinct eigenvalues, say, \(\lambda_1\) and \(\lambda_2.\)

To show that \(\mathbf{v}_1^T\mathbf{v}_2 = 0,\) compute

So we conclude that \(\lambda_1(\mathbf{v}_1^T\mathbf{v}_2) = \lambda_2(\mathbf{v}_1^T\mathbf{v}_2).\)

But \(\lambda_1 \neq \lambda_2,\) so this can only happen if \(\mathbf{v}_1^T\mathbf{v}_2 = 0.\)

So \(\mathbf{v}_1\) is orthogonal to \(\mathbf{v}_2.\)

The same argument applies to any other pair of eigenvectors with distinct eigenvalues.

So any two eigenvectors of \(A\) from different eigenspaces are orthogonal.

We can now introduce a special kind of diagonalizability:

An \(n\times n\) matrix is said to be orthogonally diagonalizable if there are an orthogonal matrix \(P\) (with \(P^{-1} = P^T\)) and a diagonal matrix \(D\) such that

Such a diagonalization requires \(n\) linearly independent and orthonormal eigenvectors.

When is this possible?

If \(A\) is orthogonally diagonalizable, then

So \(A\) is symmetric!

That is, whenever \(A\) is orthogonally diagonalizable, it is symmetric.

It turns out the converse is true (though we won’t prove it).

Hence we obtain the following theorem:

Theorem. An \(n\times n\) matrix is orthogonally diagonalizable if and only if it is a symmetric matrix.

Remember that when we studied diagonalization, we found that it was a difficult process to determine if an arbitrary matrix was diagonalizable.

But here, we have a very nice rule: every symmetric matrix is (orthogonally) diagonalizable.

Quadratic Forms¶

An important role that symmetric matrices play has to do with quadratic expressions.

Up until now, we have mainly focused on linear equations – equations in which the \(x_i\) terms occur only to the first power.

Actually, though, we have looked at some quadratic expressions when we considered least-squares problems.

For example, we looked at expressions such as \(\Vert x\Vert^2\) which is \(\sum x_i^2.\)

We’ll now look at quadratic expressions generally. We’ll see that there is a natural and useful connection to symmetric matrices.

Definition. A quadratic form is a function of variables, eg, \(x_1, x_2, \dots, x_n,\) in which every term has degree two.

Examples:

\(4x_1^2 + 2x_1x_2 + 3x_2^2\) is a quadratic form.

\(4x_1^2 + 2x_1\) is not a quadratic form.

Quadratic forms arise in many settings, including signal processing, physics, economics, and statistics.

In computer science, quadratic forms arise in optimization and graph theory, among other areas.

Essentially, what an expression like \(x^2\) is to a scalar, a quadratic form is to a vector.

Fact. Every quadratic form can be expressed as \(\mathbf{x}^TA\mathbf{x}\), where \(A\) is a symmetric matrix.

There is a simple way to go from a quadratic form to a symmetric matrix, and vice versa.

To see this, let’s look at some examples.

Example. Let \(\mathbf{x} = \begin{bmatrix}x_1\\x_2\end{bmatrix}.\) Compute \(\mathbf{x}^TA\mathbf{x}\) for the matrix \(A = \begin{bmatrix}4&0\\0&3\end{bmatrix}.\)

Solution.

Example. Compute \(\mathbf{x}^TA\mathbf{x}\) for the matrix \(A = \begin{bmatrix}3&-2\\-2&7\end{bmatrix}.\)

Solution.

Notice the simple structure: $\(a_{11} \mbox{ is multiplied by } x_1 x_1\)\( \)\(a_{12} \mbox{ is multiplied by } x_1 x_2\)\( \)\(a_{21} \mbox{ is multiplied by } x_2 x_1\)\( \)\(a_{22} \mbox{ is multiplied by } x_2 x_2\)$

We can write this concisely:

Example. For \(\mathbf{x}\) in \(\mathbb{R}^3\), let

Write this quadratic form \(Q(\mathbf{x})\) as \(\mathbf{x}^TA\mathbf{x}\).

Solution.

The coefficients of \(x_1^2, x_2^2, x_3^2\) go on the diagonal of \(A\).

Based on the previous example, we can see that the coefficient of each cross term \(x_ix_j\) is the sum of two values in symmetric positions on opposite sides of the diagonal of \(A\).

So to make \(A\) symmetric, the coefficient of \(x_ix_j\) for \(i\neq j\) must be split evenly between the \((i,j)\)- and \((j,i)\)-entries of \(A\).

You can check that

Question Time! Q24.1

Classifying Quadratic Forms¶

Notice that \(\mathbf{x}^TA\mathbf{x}\) is a scalar.

In other words, when \(A\) is an \(n\times n\) matrix, the quadratic form \(Q(\mathbf{x}) = \mathbf{x}^TA\mathbf{x}\) is a real-valued function with domain \(\mathbb{R}^n\).

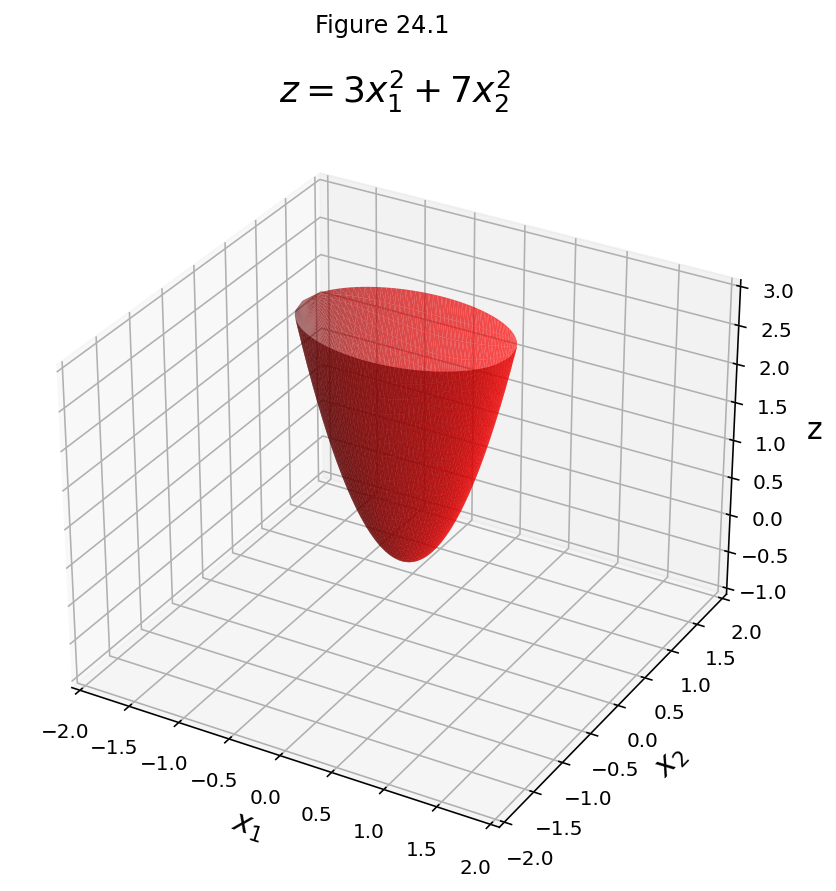

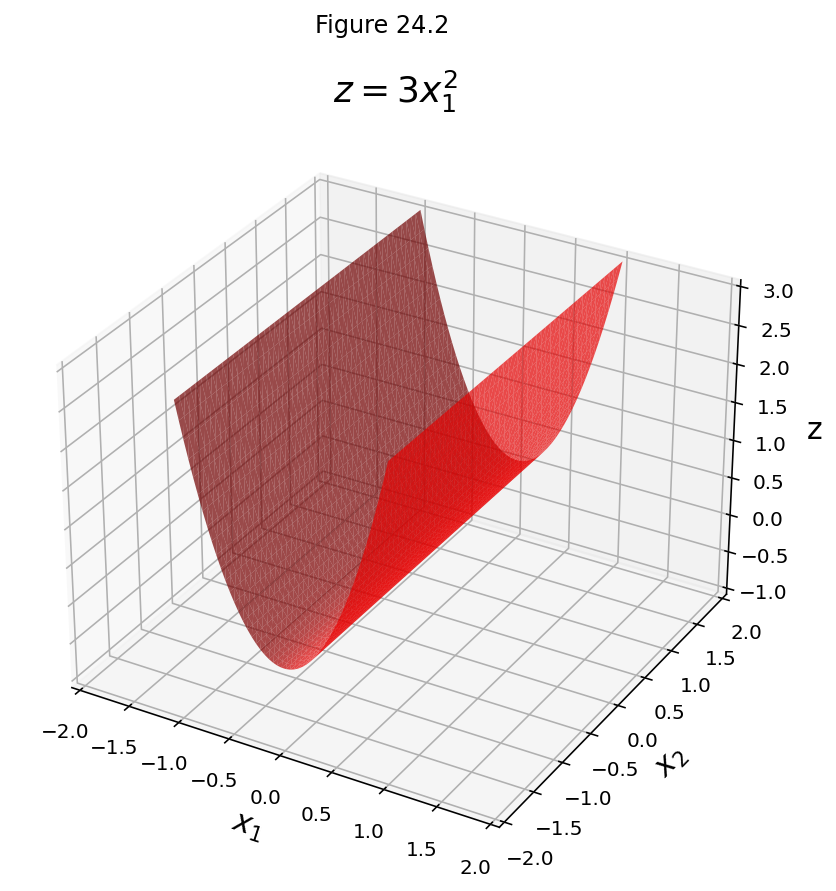

Here are four quadratic forms with domain \(\mathbb{R}^2\).

Notice that except at \(\mathbf{x}=\mathbf{0},\) the values of \(Q(\mathbf{x})\) are all positive in the first case, and all negative in the last case.

fig = ut.three_d_figure((24, 1), 'z = 3 x1^2 + 7 x2 ^2',

-2, 2, -2, 2, -1, 3,

figsize = (7, 7), qr = qr_setting)

qf = np.array([[3., 0.],[0., 7.]])

fig.plotQF(qf, alpha = 0.7)

fig.ax.set_zlabel('z')

fig.desc['zlabel'] = 'z'

fig.set_title(r'$z = 3x_1^2 + 7x_2^2$', 'z = 3x_1^2 + 7x_2^2', size = 18)

fig.save();

fig = ut.three_d_figure((24, 2), 'z = 3 x1^2',

-2, 2, -2, 2, -1, 3,

figsize = (7, 7), qr = qr_setting)

qf = np.array([[3., 0.],[0., 0.]])

fig.plotQF(qf, alpha = 0.7)

fig.ax.set_zlabel('z')

fig.desc['zlabel'] = 'z'

fig.set_title(r'$z = 3x_1^2$', 'z = 3x_1^2', size = 18)

fig.save();

fig.rotate()